Unity Catalog In Databricks

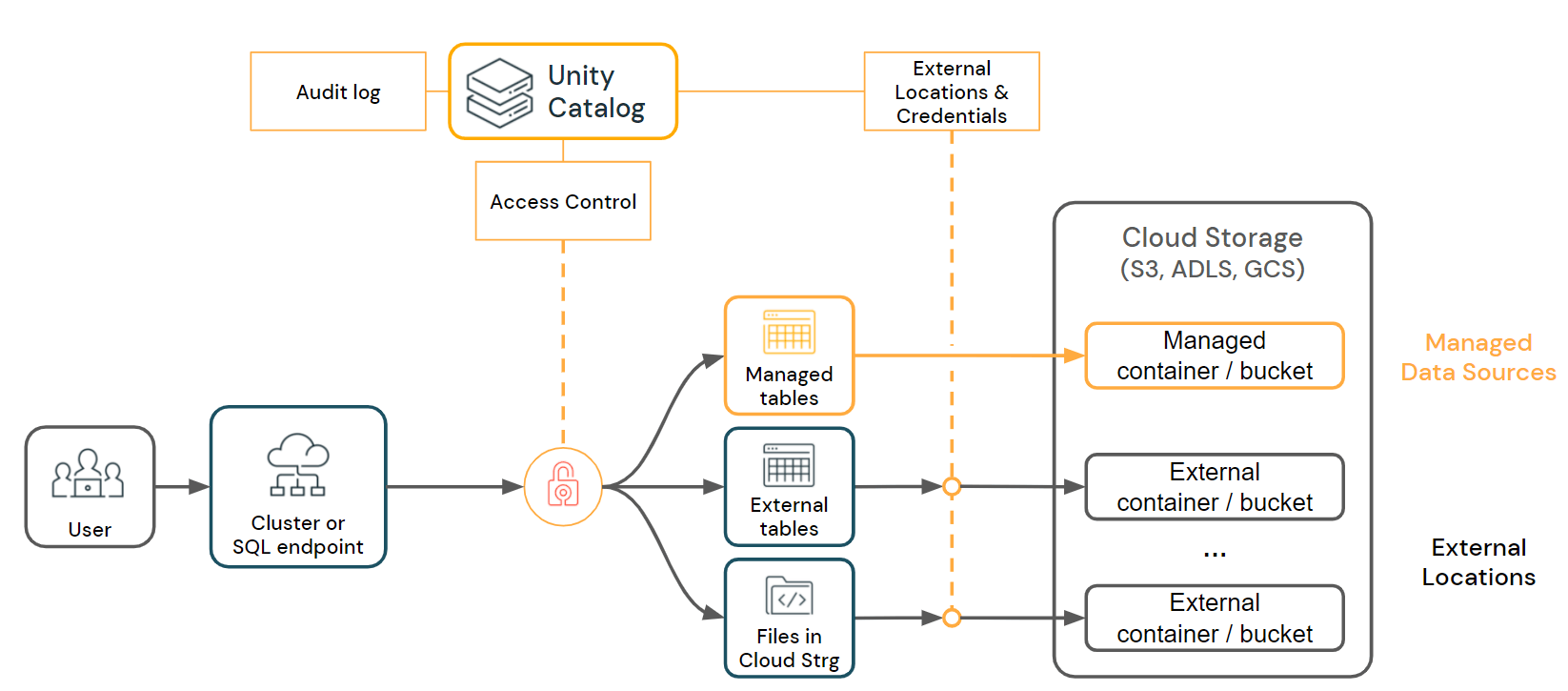

Unity Catalog is a unified governance solution for data and AI assets on Databricks. It provides centralized access control, auditing, lineage, and data discovery, along with a suite of tools for configuring secure connections to cloud object storage. A catalog is the primary unit of data organization in the Unity Catalog data governance model. Getting started involves enabling Unity Catalog, creating a catalog, and assigning permissions.
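
To make the access-control idea concrete, here is a minimal Python sketch of how privileges granted on a parent object flow down to the objects it contains. It is a simplified, hypothetical model, not Databricks code: the `Grants` class, the `can_select` helper, and the `sales`/`analysts` names are illustrative, and it ignores ownership, group membership, and admin roles. It encodes the rule that reading a table requires USE CATALOG on its catalog, USE SCHEMA on its schema, and SELECT on the table, where each privilege can come from a grant on the object itself or on any ancestor.

```python
from dataclasses import dataclass, field

# Hypothetical, simplified model of Unity Catalog privilege inheritance.
# A securable is a tuple path: ("sales",) is a catalog,
# ("sales", "crm") is a schema, ("sales", "crm", "orders") is a table.


@dataclass
class Grants:
    # (securable path, principal) -> set of privilege names
    entries: dict = field(default_factory=dict)

    def grant(self, path: tuple, principal: str, *privileges: str) -> None:
        self.entries.setdefault((path, principal), set()).update(privileges)

    def effective(self, path: tuple, principal: str) -> set:
        """Privileges granted on the object itself or on any of its ancestors."""
        result = set()
        for depth in range(1, len(path) + 1):
            result |= self.entries.get((path[:depth], principal), set())
        return result


def can_select(grants: Grants, table: tuple, principal: str) -> bool:
    """Reading a table needs USE CATALOG, USE SCHEMA, and SELECT."""
    catalog, schema = table[:1], table[:2]
    return (
        "USE CATALOG" in grants.effective(catalog, principal)
        and "USE SCHEMA" in grants.effective(schema, principal)
        and "SELECT" in grants.effective(table, principal)
    )


g = Grants()
g.grant(("sales",), "analysts", "USE CATALOG", "USE SCHEMA")
g.grant(("sales", "crm", "orders"), "analysts", "SELECT")
print(can_select(g, ("sales", "crm", "orders"), "analysts"))     # True
print(can_select(g, ("sales", "crm", "customers"), "analysts"))  # False
```

In this model, `analysts` can read `sales.crm.orders` but not `sales.crm.customers`, because SELECT was granted on only one table; granting SELECT on the `sales` catalog instead would cover every table inside it.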

Unity Catalog also lets you discover, access, and collaborate on data and AI assets.

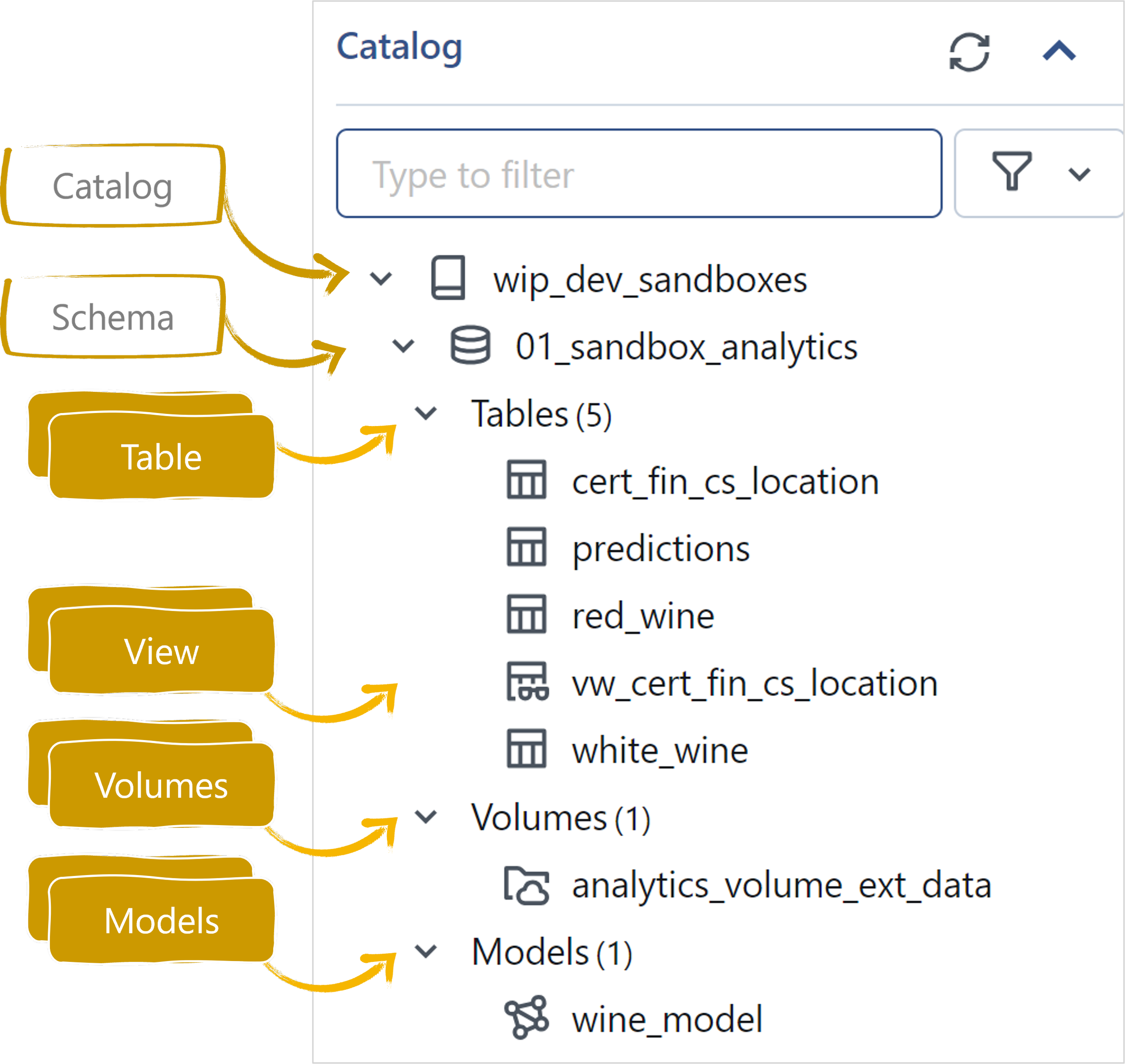

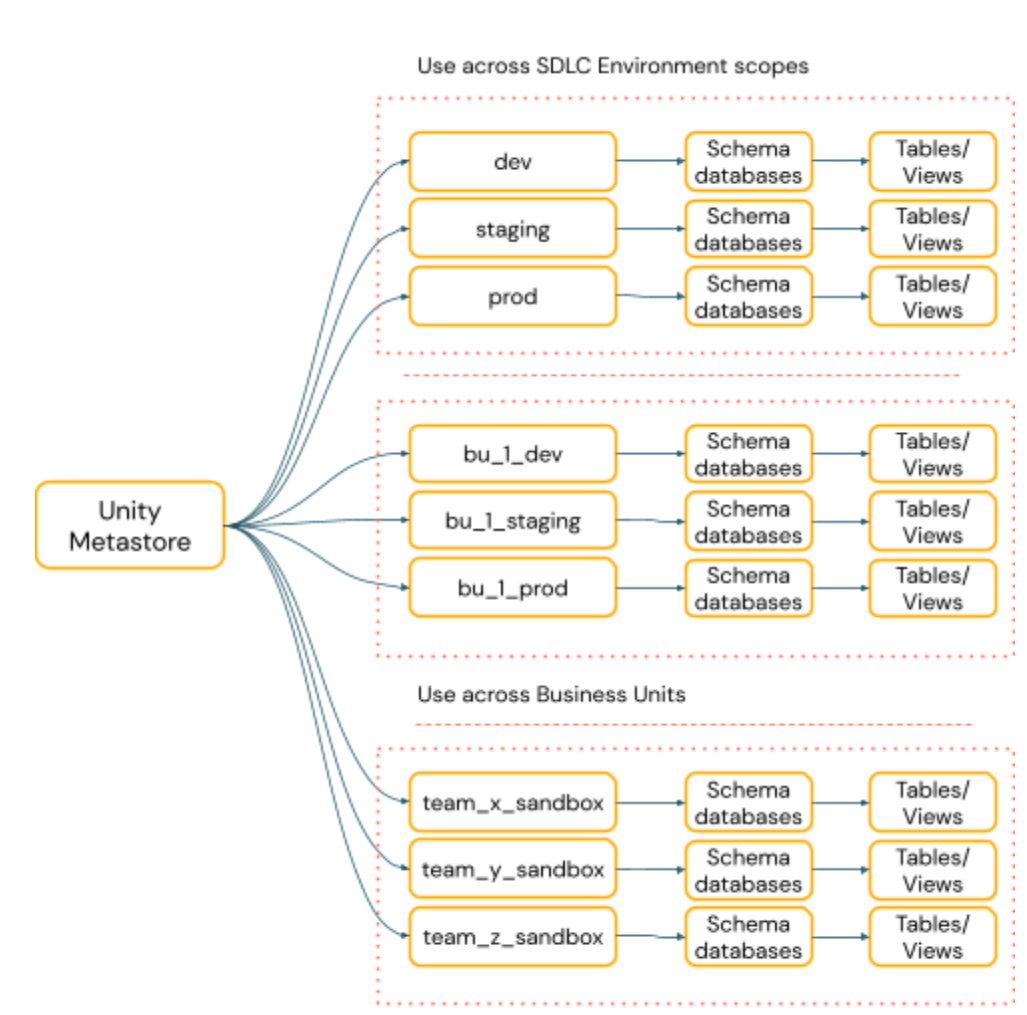

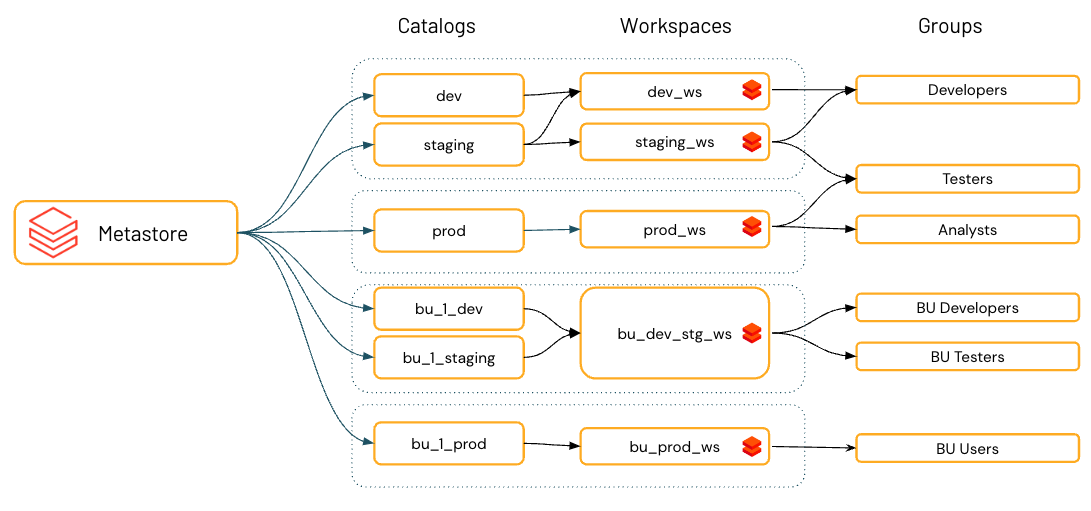

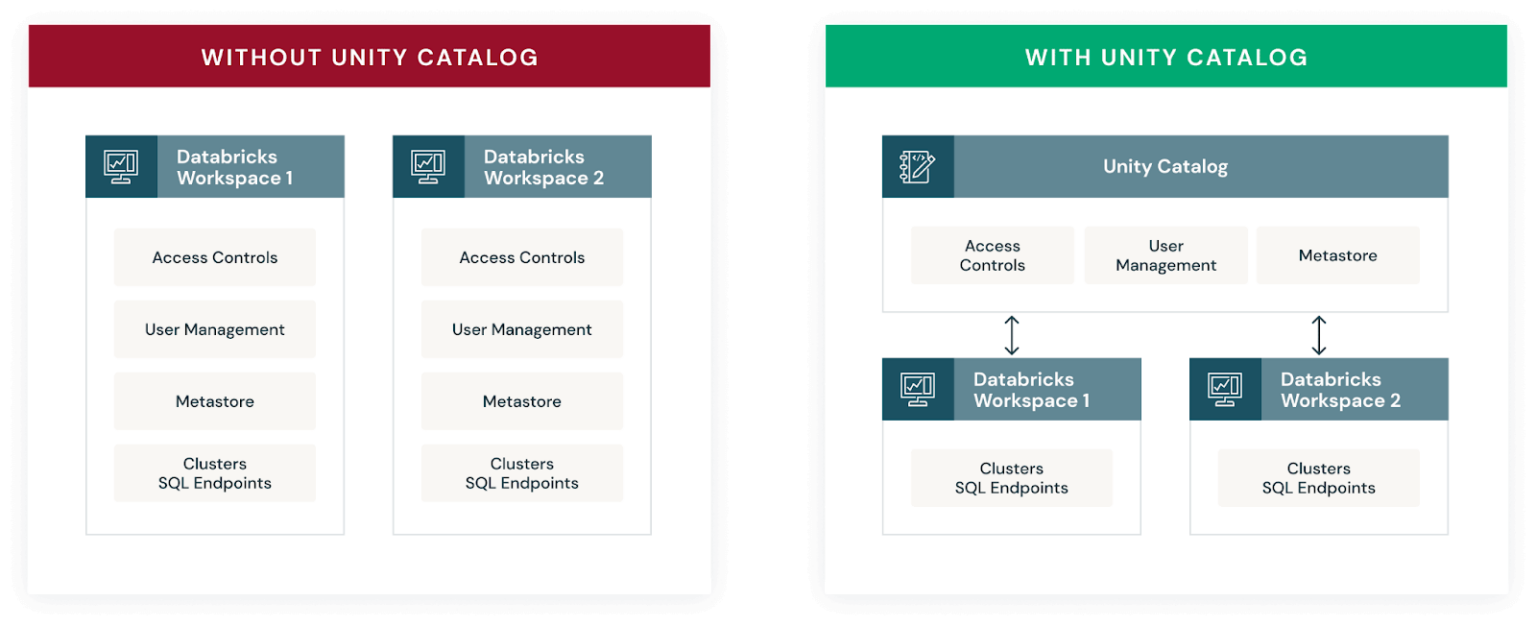

What are catalogs in Databricks?

Unity Catalog (UC) is built into the Databricks Data Intelligence Platform, where it serves as the foundation for governance and management of data objects. Databricks introduced it as a unified governance solution designed for data lakehouses. Catalogs form the first level of UC's three-level namespace (catalog.schema.object): they contain schemas, which in turn can contain tables, views, volumes, and models.
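
The three-level namespace can be illustrated with a small parser. This is a hypothetical Python helper, not part of any Databricks library; it assumes the SQL convention that identifiers containing special characters such as dots are wrapped in backticks, with a literal backtick written as two backticks.

```python
def split_identifier(name: str) -> list:
    """Split a dotted SQL name, honoring backtick-quoted parts."""
    parts, current, in_quotes, i = [], [], False, 0
    while i < len(name):
        ch = name[i]
        if ch == "`":
            if in_quotes and name[i + 1 : i + 2] == "`":
                current.append("`")  # doubled backtick = literal backtick
                i += 2
                continue
            in_quotes = not in_quotes
        elif ch == "." and not in_quotes:
            parts.append("".join(current))
            current = []
        else:
            current.append(ch)
        i += 1
    if in_quotes:
        raise ValueError(f"unterminated backtick in {name!r}")
    parts.append("".join(current))
    return parts


def parse_three_level(name: str) -> tuple:
    """Return (catalog, schema, object) for a fully qualified name."""
    parts = split_identifier(name)
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected catalog.schema.object, got {name!r}")
    return tuple(parts)


print(parse_three_level("main.sales.orders"))        # ('main', 'sales', 'orders')
print(parse_three_level("main.`my.schema`.orders"))  # ('main', 'my.schema', 'orders')
```
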
You can view, update, and delete catalogs using Catalog Explorer or SQL commands. DLT pipelines also integrate fully with Unity Catalog, which lets users read from and write to multiple catalogs and schemas.
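
The SQL side of catalog management can be sketched as a few Python functions that build the statements. The function names are hypothetical; the statements themselves (`SHOW CATALOGS`, `CREATE CATALOG`, `DESCRIBE CATALOG`, `ALTER CATALOG ... OWNER TO`, `DROP CATALOG`) are Databricks SQL commands you would run in a SQL editor or pass to `spark.sql(...)` in a notebook. The sketch backtick-quotes every identifier so names containing hyphens or dots are handled; `sales` and `data-admins` are placeholder names.

```python
def quote(identifier: str) -> str:
    """Backtick-quote an identifier, doubling any embedded backticks."""
    return "`" + identifier.replace("`", "``") + "`"


def create_catalog(name: str) -> str:
    return f"CREATE CATALOG IF NOT EXISTS {quote(name)}"


def describe_catalog(name: str) -> str:
    return f"DESCRIBE CATALOG EXTENDED {quote(name)}"


def set_catalog_owner(name: str, principal: str) -> str:
    return f"ALTER CATALOG {quote(name)} OWNER TO {quote(principal)}"


def drop_catalog(name: str, cascade: bool = False) -> str:
    # RESTRICT (the default) refuses to drop a non-empty catalog;
    # CASCADE also drops every schema and object inside it.
    mode = "CASCADE" if cascade else "RESTRICT"
    return f"DROP CATALOG IF EXISTS {quote(name)} {mode}"


for stmt in (
    "SHOW CATALOGS",
    create_catalog("sales"),
    describe_catalog("sales"),
    set_catalog_owner("sales", "data-admins"),
    drop_catalog("sales"),
):
    print(stmt)  # in a Databricks notebook: spark.sql(stmt)
```

Making CASCADE opt-in keeps the destructive form explicit at the call site.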

Databricks recommends configuring DLT pipelines with Unity Catalog, which helps simplify security and governance of data and AI assets. The same governance model is available on Azure Databricks, and it can serve as the basis for a scalable, federated governance design.
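
When a pipeline or query touches several catalogs, names that are not fully qualified are resolved against a current catalog and schema: a one-part name takes both defaults, and a two-part name takes the default catalog. The helper below is a hypothetical Python sketch of that resolution rule; it assumes plain identifiers without dots or backticks, and `main`, `sales`, and `raw` are placeholder names.

```python
def qualify(name: str, default_catalog: str, default_schema: str) -> str:
    """Expand a 1-, 2-, or 3-part name to catalog.schema.object."""
    parts = name.split(".")  # assumes no dots or backticks inside identifiers
    if len(parts) > 3:
        raise ValueError(f"too many name parts in {name!r}")
    # Prepend only as many defaults as are missing.
    defaults = [default_catalog, default_schema]
    full = defaults[: 3 - len(parts)] + parts
    if not all(full):
        raise ValueError(f"empty name part in {name!r}")
    return ".".join(full)


print(qualify("orders", "main", "sales"))              # main.sales.orders
print(qualify("crm.customers", "main", "sales"))       # main.crm.customers
print(qualify("raw.landing.events", "main", "sales"))  # raw.landing.events
```
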

Pipelines configured with Unity Catalog publish all defined materialized views and streaming tables to the target catalog and schema set in the pipeline configuration.

An Ultimate Guide to Databricks Unity Catalog - Advancing Analytics

Unity Catalog Setup: A Guide to Implementing in Databricks

Data governance overview - Azure Databricks | Microsoft Learn

Introducing Unity Catalog: A Unified Governance Solution for Lakehouse

Privacera + Databricks Unity Catalog: A Secure Combination for Open

Databricks Unity Catalog: Robust Data Governance & Discovery

Databricks Unity Catalog — Unified governance for data, analytics and AI

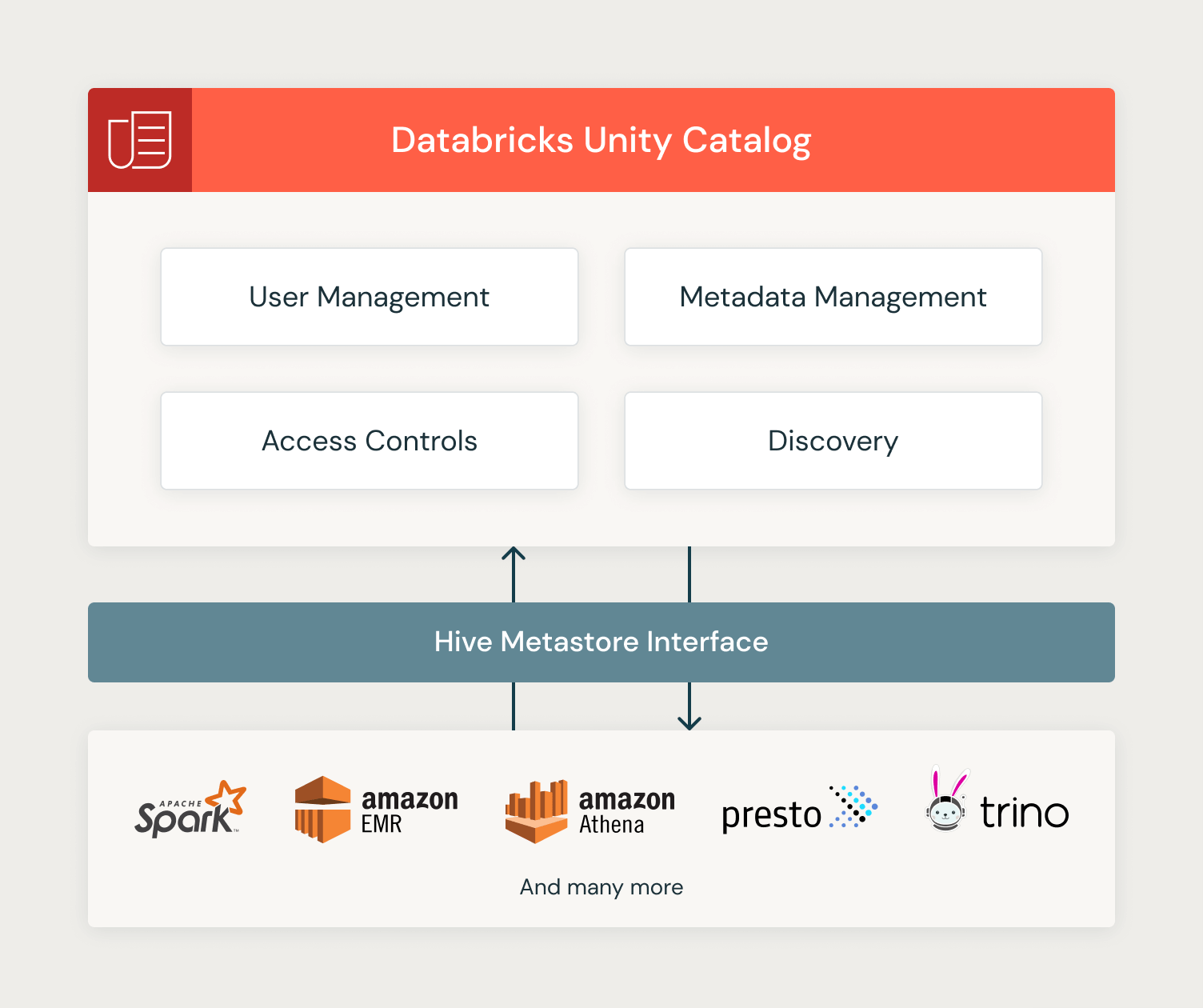

Extending Databricks Unity Catalog with an Open Apache Hive Metastore

Databricks Unity Catalog: Insights into the Most Important Components and
